How do you know your YAML manifests are configured correctly? How do you know you are following best practices when deploying your microservices to your Kubernetes cluster? Usually, this is a learning process that requires writing lots and lots of YAML, deploying those manifests, and seeing what works, what does not, and where you run into errors.

However, there are several tools that make it easier to write thorough and secure Kubernetes manifests. One of those tools is Datree. Datree helps prevent Kubernetes misconfigurations from reaching production by comparing your manifests against best practices drawn from Kubernetes releases and from the experience of many organisations.

If you would like to learn more about getting started with Datree, have a look at the following YouTube tutorial and the documentation. Datree makes it extremely easy to get started in just two commands.

In the video linked above, I showcased how you can define your Kubernetes policies within the Datree UI Dashboard. This method is pretty straightforward: you can enable and disable policies, set the description of each policy, tag people's GitHub accounts, or add links to further information.

A lot of DevOps-related work is done in the terminal. With methodologies such as Infrastructure as Code and GitOps gaining popularity, DevOps engineers, developers and administrators aim to define all resources as code and track their versions in Git.

Datree allows us to do exactly this: define our Policy as Code.

As a result, instead of having to update all the policies within the UI, we can define our Kubernetes policies in YAML and apply those to our Datree account.

You can view the Video recording of this tutorial here:

How does that work?

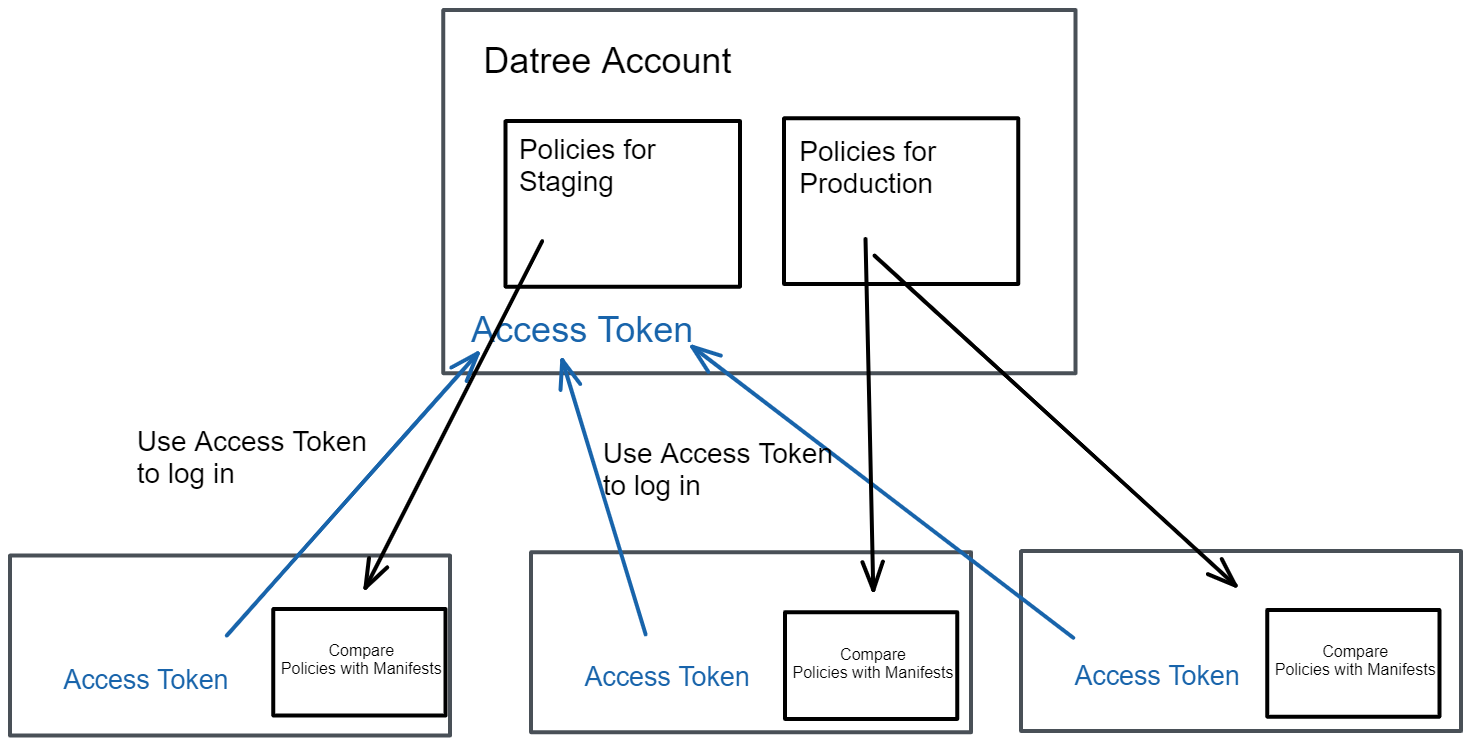

When you first use Datree, an account is created for you with a unique account token. You can give other team members access to the account by sharing that token.

This makes it possible for Datree to manage all your account information centrally. As a result, you can access the same information whether you are using Datree locally in your terminal or through a CI/CD platform such as GitHub Actions.
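For a CI job, that usually means supplying the account token through the environment rather than relying on a local configuration file. The function below is a minimal sketch: it assumes that the CLI reads the token from a `DATREE_TOKEN` environment variable (please check the Datree documentation for your version), and both the function name `ci_policy_check` and the `DATREE_BIN` override are made up for this example.

```shell
# Sketch of a CI step: refuse to run without an account token, then test
# the given manifests.
# Assumption: the Datree CLI picks up DATREE_TOKEN from the environment.
# DATREE_BIN is a hypothetical override that lets you dry-run the function
# (for example DATREE_BIN=echo); it is not a Datree setting.
ci_policy_check() {
  if [ -z "${DATREE_TOKEN:-}" ]; then
    echo "DATREE_TOKEN is not set; add it as a CI secret" >&2
    return 1
  fi
  "${DATREE_BIN:-datree}" test "$@"
}
```

In a pipeline you would call it as `ci_policy_check ./manifests/deployment.yaml` after exposing the token from the platform's secret store.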

The diagram above provides an overview.

Policy as Code

In this section, we are going to

- Access our Datree account & download the default policy file

- Modify the Policies for two different environments, staging and production

- Apply our policy configuration

- Test our policy configuration for both environments with the same YAML Manifests

Access Account

If you do not have Datree installed yet, go ahead and install the CLI by running the following command in your terminal:

curl https://get.datree.io | /bin/bash

For different installation instructions, please have a look at the Datree documentation.

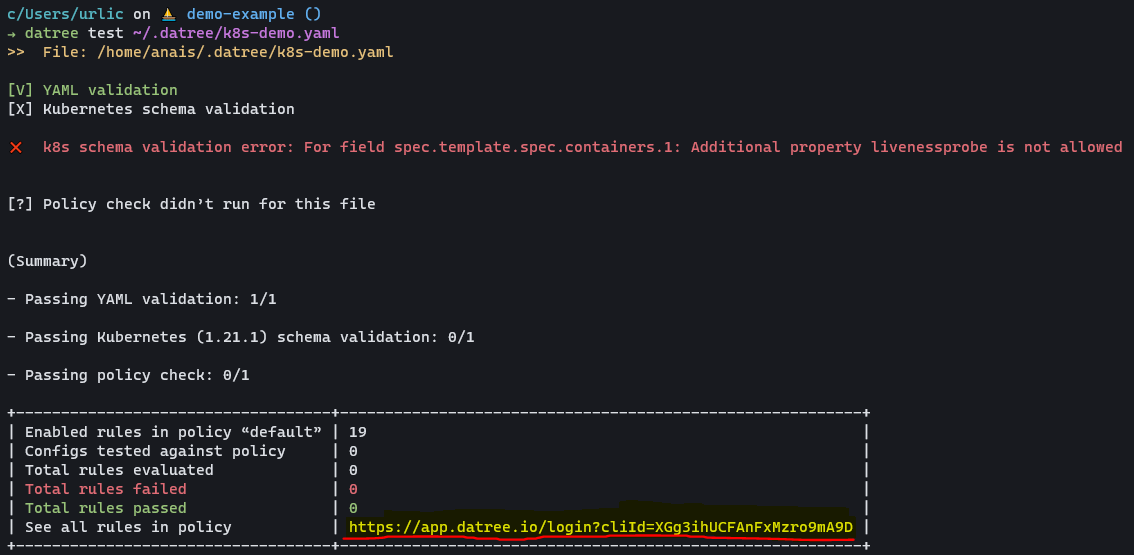

When you run the example test command, you will be provided with the link to the UI Dashboard:

datree test ~/.datree/k8s-demo.yaml

Benefits of Policy as Code

- All information is recorded in Git

- You can easily share Policies with team members

- It enables you to set different policy settings per environment

Next, open the link that is highlighted in the above screenshot. Your link will likely be slightly different.

Note that if the Dashboard does not open right away, try Ctrl + click on the link.

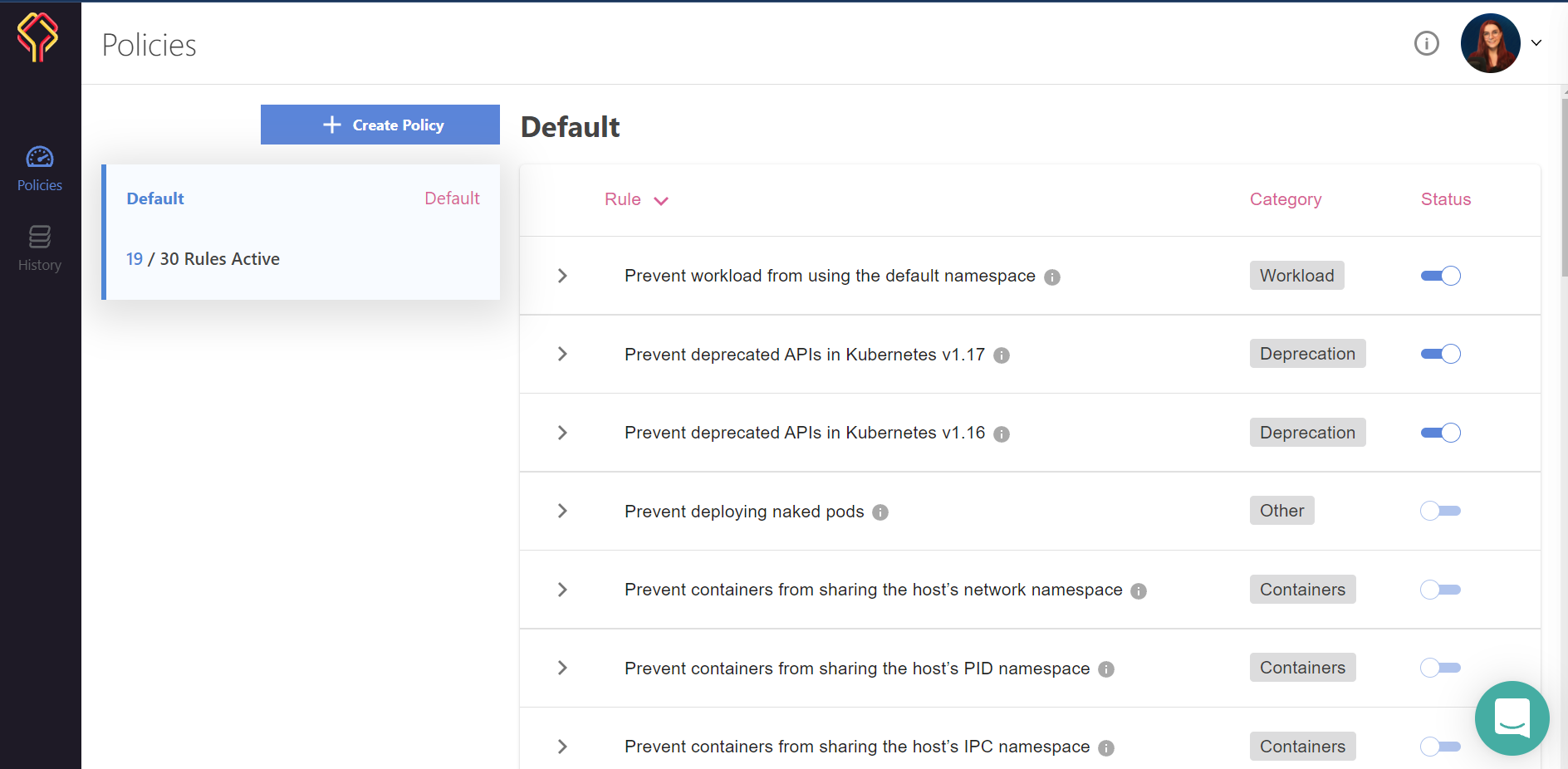

This will take us to the Datree Dashboard:

As you can see, I currently have only one policy defined. This policy has 19 rules. I can toggle rules on and off, as well as edit them, from the UI.

If you are following GitOps best practices, you only want users to change settings by opening a PR in Git. If someone were to modify policies through the UI while you keep your policies in Git, it would cause a lot of confusion.

Thus, we need to tell Datree that we want to use just Policies as Code without allowing edits through the UI.

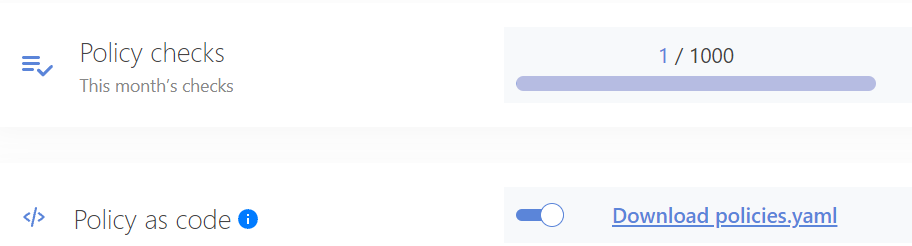

To enable Policy as Code, head over to Your Account (top left) > Settings. Next:

- Enable Policy as Code

- Download the policies.yaml file

This file will contain all the default policies. You can also see an example in the documentation.

Modify and apply policies

Below is an example section from the policies.yaml file:

apiVersion: v1
policies:
  - name: Default
    isDefault: true
    rules:
      - identifier: CONTAINERS_MISSING_IMAGE_VALUE_VERSION
        messageOnFailure: Incorrect value for key `image` - specify an image version to avoid unpleasant “version surprises” in the future
      # - identifier: K8S_INCORRECT_KIND_VALUE_POD
      #   messageOnFailure: Incorrect value for key `kind` - raw pod won't be rescheduled in the event of a node failure

Let's go through the file:

- name: The name of the policy. If we have policies for multiple environments, we tell Datree which one to use by passing this name when running "datree test".

- isDefault: Marks the policy that is used when several policies are defined and we do not specify one when running "datree test".

- identifier: Currently, identifiers are provided by Datree and cannot be changed. Take a look at the documentation to learn which rules and identifiers are available.

- messageOnFailure: The message shown to the user when the rule fails, explaining what went wrong. Note that you can include links and @GitHub-name mentions to tag people in the message.

- The last two lines are commented out. You can either delete the rules that you do not want to use right now or comment them out to keep them for future reference.

Make sure that each line is indented correctly.
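When editing the file by hand, it is easy to paste the same rule twice into one policy or to end up without exactly one default policy. The following is a minimal sketch of a sanity check you could run before publishing. It treats the file as plain text rather than parsing YAML, and the function names `duplicate_identifiers` and `count_default_policies` are made up for this example; they are not part of Datree.

```shell
# Hypothetical helper (not part of Datree): print "<policy> <identifier>"
# for every rule that appears more than once within the same policy.
# Commented-out lines are ignored. Policy names containing spaces are
# reduced to their last word, which is good enough for a quick check.
duplicate_identifiers() {
  awk '
    /^[ \t]*#/ { next }                              # skip comments
    /^[ \t]*-[ \t]*name:/ { policy = $NF; next }     # entering a new policy
    /^[ \t]*-[ \t]*identifier:/ {
      if (seen[policy, $NF]++ == 1) print policy, $NF  # report once per duplicate
    }
  ' "$1"
}

# Hypothetical helper: count the policies marked with "isDefault: true".
count_default_policies() {
  grep -c -E '^[[:space:]]*isDefault:[[:space:]]*true[[:space:]]*$' "$1"
}
```

Running `duplicate_identifiers policies.yaml` prints nothing when every rule appears only once per policy, and `count_default_policies policies.yaml` should print 1.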

We are going to modify the file for our staging and for our production environment.

policies.yaml

apiVersion: v1
policies:
  - name: Staging
    isDefault: true
    rules:
      - identifier: CONTAINERS_MISSING_IMAGE_VALUE_VERSION
        messageOnFailure: Incorrect value for key `image` - specify an image version to avoid unpleasant “version surprises” in the future
      - identifier: CRONJOB_INVALID_SCHEDULE_VALUE
        messageOnFailure: 'Incorrect value for key `schedule` - the (cron) schedule expression is not valid and therefore will not work as expected'
      - identifier: WORKLOAD_INVALID_LABELS_VALUE
        messageOnFailure: Incorrect value for key(s) under `labels` - the value syntax is not valid, so it will not be accepted by the Kubernetes engine
      - identifier: WORKLOAD_INCORRECT_RESTARTPOLICY_VALUE_ALWAYS
        messageOnFailure: Incorrect value for key `restartPolicy` - any other value than `Always` is not supported by this resource
      - identifier: HPA_MISSING_MAXREPLICAS_KEY
        messageOnFailure: Missing property object `maxReplicas` - the value should be within the accepted boundaries recommended by the organization
      - identifier: WORKLOAD_INCORRECT_NAMESPACE_VALUE_DEFAULT
        messageOnFailure: Incorrect value for key `namespace` - use an explicit namespace instead of the default one (`default`)
      - identifier: DEPLOYMENT_INCORRECT_REPLICAS_VALUE
        messageOnFailure: Incorrect value for key `replicas` - don't rely on a single pod to do all of the work. Running 2 or more replicas will increase the availability of the service
      - identifier: K8S_DEPRECATED_APIVERSION_1.16
        messageOnFailure: Incorrect value for key `apiVersion` - the version you are trying to use is not supported by the Kubernetes cluster version (>=1.16)
      - identifier: K8S_DEPRECATED_APIVERSION_1.17
        messageOnFailure: Incorrect value for key `apiVersion` - the version you are trying to use is not supported by the Kubernetes cluster version (>=1.17)
      - identifier: CONTAINERS_INCORRECT_PRIVILEGED_VALUE_TRUE
        messageOnFailure: Incorrect value for key `privileged` - this mode will allow the container the same access as processes running on the host
      - identifier: CRONJOB_MISSING_CONCURRENCYPOLICY_KEY
        messageOnFailure: Missing property object `concurrencyPolicy` - the behavior will be more deterministic if jobs won't run concurrently
      - identifier: HPA_MISSING_MINREPLICAS_KEY
        messageOnFailure: 'Missing property object `minReplicas` - the value should be within 3 to 5 pods. Please contact @AnaisUrlichs for further information.'
      - identifier: SERVICE_INCORRECT_TYPE_VALUE_NODEPORT
        messageOnFailure: 'Incorrect value for key `type` - `NodePort` will open a port on all nodes where it can be reached by the network external to the cluster. Please use ClusterIP instead. Contact @AnaisUrlichs for further information.'
  - name: Prod
    isDefault: false
    rules:
      - identifier: CONTAINERS_MISSING_IMAGE_VALUE_VERSION
        messageOnFailure: Incorrect value for key `image` - specify an image version to avoid unpleasant “version surprises” in the future
      - identifier: K8S_INCORRECT_KIND_VALUE_POD
        messageOnFailure: Incorrect value for key `kind` - raw pod won't be rescheduled in the event of a node failure
      - identifier: CONTAINERS_MISSING_MEMORY_REQUEST_KEY
        messageOnFailure: Missing property object `requests.memory` - value should be within the accepted boundaries recommended by the organization.
      - identifier: CONTAINERS_MISSING_CPU_REQUEST_KEY
        messageOnFailure: Missing property object `requests.cpu` - value should be within the accepted boundaries recommended by the organization
      - identifier: CONTAINERS_MISSING_MEMORY_LIMIT_KEY
        messageOnFailure: Missing property object `limits.memory` - value should be within the accepted boundaries recommended by the organization
      - identifier: CONTAINERS_MISSING_CPU_LIMIT_KEY
        messageOnFailure: Missing property object `limits.cpu` - value should be within the accepted boundaries recommended by the organization

As you can tell, I have set different policies for my staging and my production environment. Instead of duplicating rules in both policies, you could either test against both policies before deploying to production, or only allow deployments to staging once all the policies have passed.

This file tells Datree which policies we want to have in place for our different environments. It is not used directly to check manifests, for example during our CI/CD pipeline build.

Apply the policies

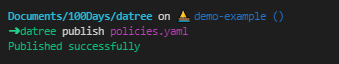

We want to let Datree know about our changed policies. This can be done with the following command:

datree publish policies.yaml

Note that if the command cannot be found, you most likely had an older version of Datree installed. Make sure to update Datree by following the installation instructions.
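If you publish from a script, you can guard against an outdated CLI up front. This is only a sketch: it assumes that `datree publish --help` exits with a non-zero status on CLI versions that do not know the `publish` command, which I have not verified for every release. The function name `publish_policies` and the `DATREE_BIN` override are hypothetical and exist only in this example.

```shell
# Publish a policy file only if the installed CLI knows `publish`.
# Assumption: `datree publish --help` fails on versions without the command.
# DATREE_BIN is a hypothetical override for dry runs, not a Datree setting.
publish_policies() {
  file="${1:-policies.yaml}"
  bin="${DATREE_BIN:-datree}"
  if ! "$bin" publish --help >/dev/null 2>&1; then
    echo "datree is missing or too old to support 'publish'; (re)install the CLI" >&2
    return 1
  fi
  "$bin" publish "$file"
}
```

Calling `publish_policies` without arguments publishes `policies.yaml` from the current directory.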

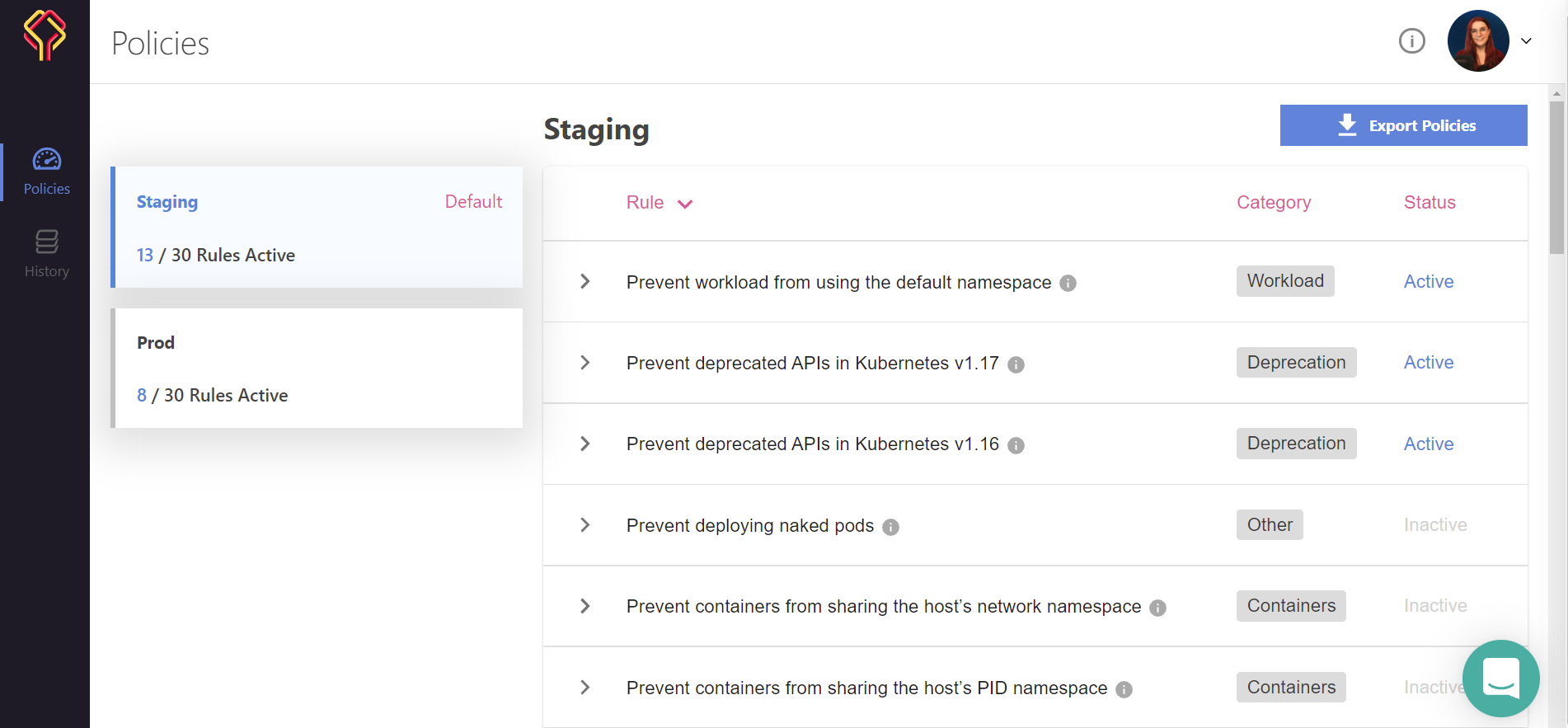

Once you run the command, you should see the new policies within the UI:

Because Policy as Code is enabled, you will not be able to change the policies in the UI, as discussed earlier in this post.

Test Kubernetes manifests for misconfiguration

I want to deploy my containerized application to my Kubernetes cluster. However, before doing so, I want to ensure that it does not contain any misconfiguration and complies with the policy rules that I set earlier.

This is my deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: react-application
spec:
  replicas: 2
  selector:
    matchLabels:
      run: react-application
  template:
    metadata:
      labels:
        run: react-application
    spec:
      containers:
        - name: react-application
          image: anaisurlichs/react-example-app:7.0.0
          ports:
            - containerPort: 80
          imagePullPolicy: Always

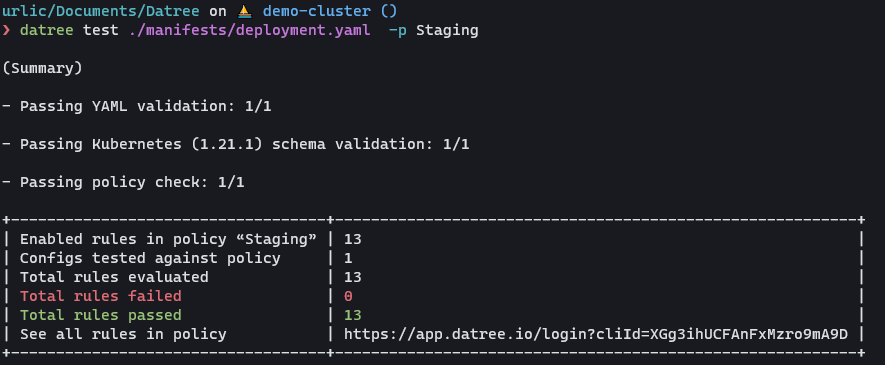

Let's run the following command to test our deployment manifest:

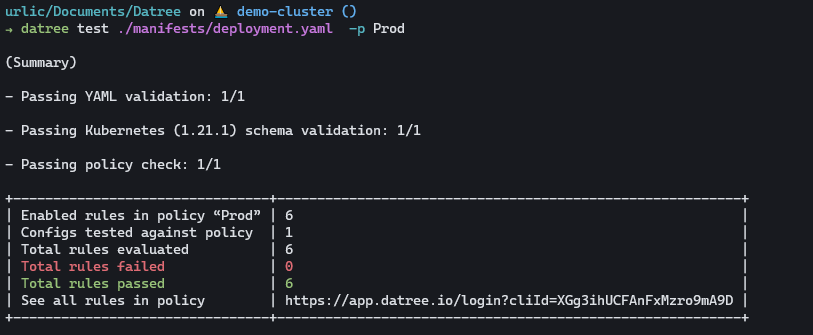

datree test ./manifests/deployment.yaml -p Staging

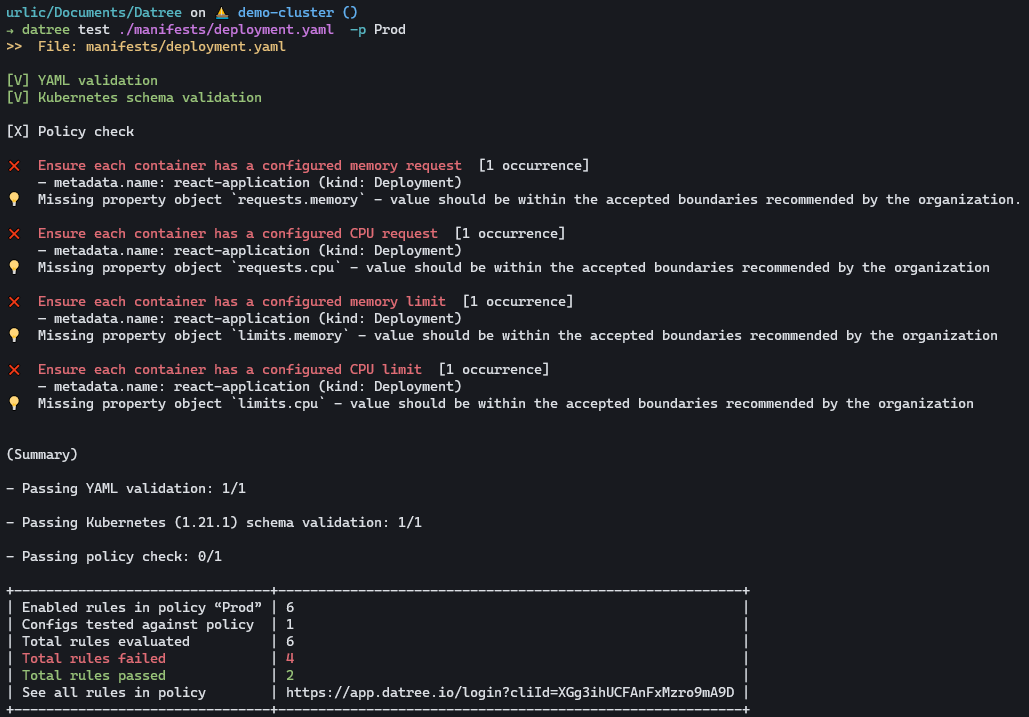

datree test ./manifests/deployment.yaml -p Prod

As you can see, 6 of our rules have failed. Now we are going to correct our Kubernetes manifest for our deployment so that our rules pass. This is the updated deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: react-application
spec:
  replicas: 2
  selector:
    matchLabels:
      run: react-application
  template:
    metadata:
      labels:
        run: react-application
    spec:
      containers:
        - name: react-application
          image: anaisurlichs/react-example-app:7.0.0
          ports:
            - containerPort: 80
          imagePullPolicy: Always
          resources:
            limits:
              memory: 512Mi
              cpu: 500m
            requests:
              memory: 256Mi
              cpu: 500m
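Rather than typing one `datree test` command per policy, the checks can be wrapped in a small loop. This is a minimal sketch, assuming that `datree test` exits with a non-zero status when a rule fails; the function name `test_against_policies` and the `DATREE_BIN` override are hypothetical and only exist in this example.

```shell
# Run `datree test` for one manifest against each named policy.
# DATREE_BIN is a hypothetical override used for dry runs
# (for example DATREE_BIN=echo); it is not a Datree setting.
test_against_policies() {
  manifest="$1"
  shift
  failed=0
  for policy in "$@"; do
    echo "==> ${manifest} against policy ${policy}"
    # Assumption: datree exits non-zero when at least one rule fails.
    "${DATREE_BIN:-datree}" test "$manifest" -p "$policy" || failed=1
  done
  # Non-zero if any policy reported a failure.
  return "$failed"
}
```

For example, `test_against_policies ./manifests/deployment.yaml Staging Prod` checks the manifest against both policies from this post and returns a non-zero status if either of them reports a failure.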

Once you make those updates to your deployment.yaml, all of the policies that we had defined earlier should pass:

To access our application, we can use the following service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: react-application
  labels:
    run: react-application
spec:
  type: NodePort
  ports:
    - port: 8080
      targetPort: 80
      protocol: TCP
      name: http
  selector:
    run: react-application

Assuming you are connected to a Kubernetes cluster, you can now apply both resources.

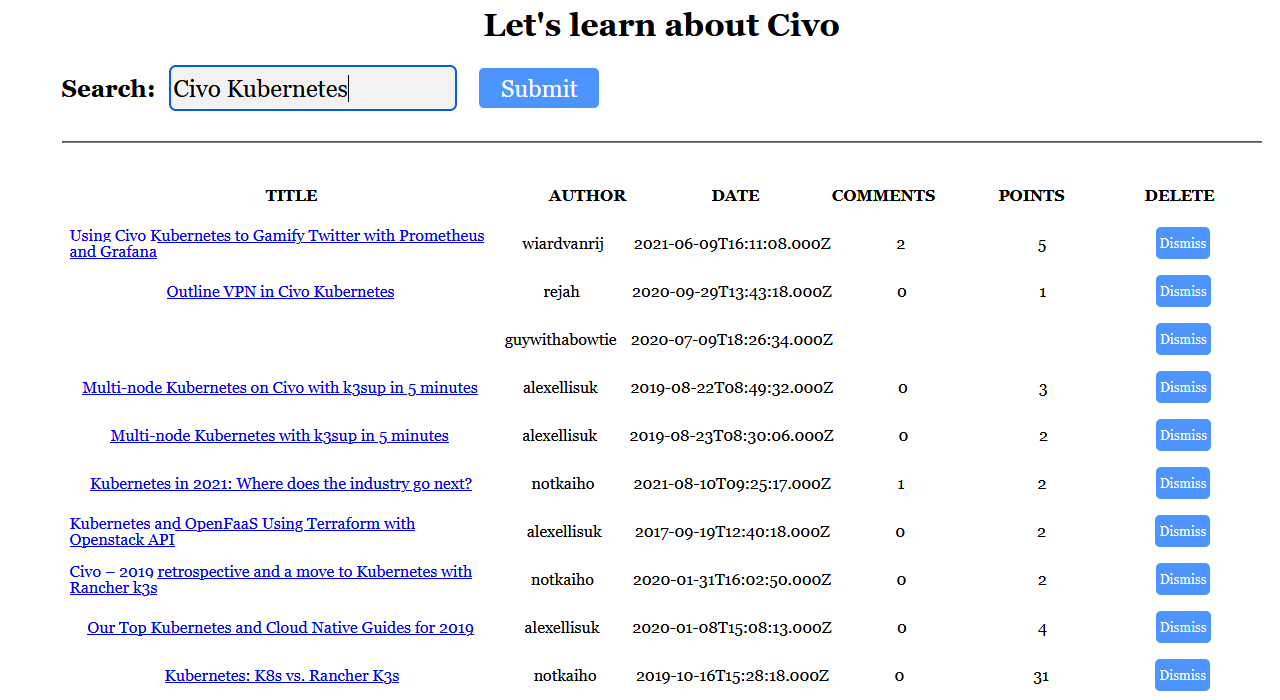

If you do not have a Kubernetes cluster available, either sign up at Civo.com and create a free account (you will get $250 worth of credit upon sign-up) or create a kind cluster:

brew install kind

kind create cluster --name datree

Alternative installation options can be found in the KinD documentation.

Now create a new namespace, which in our case we are going to call datree, and apply both manifests to it:

kubectl create ns datree

kubectl apply -f deployment.yaml -n datree

kubectl apply -f service.yaml -n datree

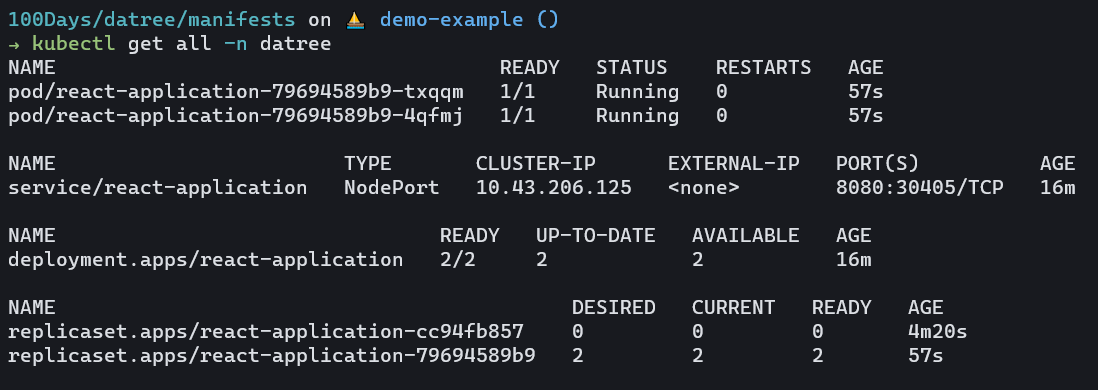

Lastly, we are going to have a look at the resources listed in the datree namespace:

kubectl get all -n datree
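Listing the resources shows what exists, but not whether the rollout has finished. As a small sketch, `kubectl rollout status` can block until the Deployment is ready. The 120 second timeout is an arbitrary choice, and the function name `wait_for_rollout` and the `KUBECTL_BIN` override are made up for this example.

```shell
# Wait until the Deployment from this post has finished rolling out.
# KUBECTL_BIN is a hypothetical override for dry runs
# (for example KUBECTL_BIN=echo); the timeout value is arbitrary.
wait_for_rollout() {
  namespace="${1:-datree}"
  "${KUBECTL_BIN:-kubectl}" rollout status deployment/react-application \
    -n "$namespace" --timeout=120s
}
```

Calling `wait_for_rollout` without arguments uses the datree namespace created above.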

You can access the application by running:

kubectl port-forward service/react-application 8080:8080 -n datree

Recap ⏪

In this blog post, we learnt how we can make effective use of Datree to

- define our Policies as Code

- publish our Policies to Datree

- modify our policies

- test our Kubernetes manifests

- improve our Kubernetes manifest

- deploy our corrected Kubernetes manifests to our Kubernetes cluster

If you would like to see more on optimising your deployments, please let me know.

Lastly, if you like Datree, give it a star on GitHub and share this blog post so that others can find it too.

Until next time 👋🏼